For all of AI’s limitations, human limitations are also part of the equation. What questions you ask and how you ask them can influence the answers that AI generates. Try asking a question in different ways and see if you get the same answer each time. Crafting questions this way is known as “prompt engineering”. The more specific your question is, the more specific an answer you’ll receive. Vague questions can result in vague, and sometimes misleading, information.
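
For readers who like to experiment, the "ask it several ways" test can be sketched in a few lines of Python. This is only an illustration, not a recommended tool: `consistency_check` and `fake_chatbot` are hypothetical names made up for this example, the canned replies are placeholders, and a real setup would swap `fake_chatbot` for a call to whichever chatbot you actually use.

```python
from collections import Counter
from typing import Callable


def normalize(answer: str) -> str:
    """Lowercase and collapse whitespace so trivially different answers match."""
    return " ".join(answer.lower().split())


def consistency_check(ask: Callable[[str], str], phrasings: list[str]) -> dict:
    """Ask the same question several ways and report how often the answers agree."""
    if not phrasings:
        raise ValueError("Provide at least one phrasing of the question.")
    answers = {p: normalize(ask(p)) for p in phrasings}
    counts = Counter(answers.values())
    _, votes = counts.most_common(1)[0]  # size of the largest group of identical answers
    return {
        "answers": answers,
        "agreement": votes / len(phrasings),
        "consistent": len(counts) == 1,
    }


def fake_chatbot(prompt: str) -> str:
    """Hypothetical stand-in for a real chatbot; returns canned placeholder replies."""
    canned = {
        "What is a normal resting heart rate for adults?": "60 to 100 beats per minute.",
        "How fast should an adult's heart beat at rest?": "60 to 100  Beats per minute.",
        "Is a resting pulse of 50 normal?": "It depends on the person.",
    }
    return canned.get(prompt, "I am not sure.")


if __name__ == "__main__":
    questions = [
        "What is a normal resting heart rate for adults?",
        "How fast should an adult's heart beat at rest?",
        "Is a resting pulse of 50 normal?",
    ]
    report = consistency_check(fake_chatbot, questions)
    print(report["consistent"], report["agreement"])
```

Exact text matching is a deliberately crude comparison. Real chatbot answers rarely repeat word for word, so in practice a person still needs to read the answers side by side.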

The World Health Organization (WHO) has weighed in as well: “While the WHO is enthusiastic about the appropriate use of technologies, including LLMs, to support healthcare professionals, patients, researchers, and scientists, there is concern that caution that would normally be exercised for any new technology is not being exercised consistently with LLMs.” The organization draws attention to the “precipitous adoption of untested systems,” which “could lead to errors by healthcare workers, cause harm to patients, erode trust in AI, and thereby undermine (or delay) the potential long-term benefits and uses of such technologies around the world.”

Issues with AI

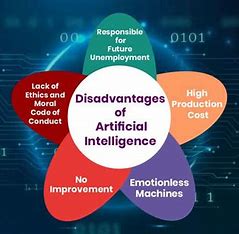

Staying current. Another challenge is that scientific and medical data is not static. It’s constantly evolving and being updated. What was the standard of care in 2021, the year to which ChatGPT is current, may not be the standard of care in the near future.

AI Failures. There can be serious consequences when AI gets it wrong. Clinician Dr. Joshua Tamayo-Sarver said that an AI diagnostic assistant he tried missed life-threatening conditions.

Disinformation. Sam Altman, OpenAI CEO, has said that among his concerns is the ability of LLMs, like the ones underpinning ChatGPT, to provide “one-on-one interactive disinformation.” In manufacturing, this might just mean lower efficiency or higher costs, but in healthcare it could significantly jeopardize patient safety. AI has also been shown to spread disinformation faster and more effectively than humans. Conversely, it can be used to track disinformation.

Security Risks. Are your employees feeding sensitive business data, including privacy-protected details, to chatbots? If so, you should be very worried about security! Cybersecurity companies have reported that over 4% of employees have risked leaking confidential information, client data, source code and privacy-regulated information to LLMs (large language models). Stanford Health Care Chief Data Scientist Nigam Shah also warns that ChatGPT might not protect private data.
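
One partial safeguard is to strip obvious identifiers from text before anyone pastes it into a chatbot. The Python sketch below shows the idea under stated assumptions: the `redact` function and the three patterns (email addresses, North American phone numbers, and a 10-digit health number written as 4-3-3 digits) are illustrative choices for this example, not a complete or validated list. Simple patterns like these will miss names, addresses and free-text clinical details, so this is no substitute for a privacy policy and staff training.

```python
import re

# Illustrative patterns only (assumptions for this sketch, not a complete list).
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "HEALTH_NUMBER": re.compile(r"\b\d{4}[-\s]\d{3}[-\s]\d{3}\b"),
}


def redact(text: str) -> str:
    """Replace each match of the patterns above with a labelled placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label} REDACTED]", text)
    return text


if __name__ == "__main__":
    note = "Contact jane.doe@example.com or 416-555-0199 about card 1234-567-890."
    print(redact(note))
```

The placeholders keep the sentence readable, so the chatbot can still help with wording or formatting without ever seeing the underlying details.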

Regulation. Geoffrey Hinton, a Canadian and one of the three “godfathers” of AI, quit Google over AI ethics. Recently, the Center for AI Safety issued this statement: “Mitigating the risks of extinction from AI should be a global priority alongside other societal risks, such as pandemics or nuclear war.” It is interesting that the goal is “mitigating” and not “eliminating” (Vicky Mochama, Opinion, The Globe and Mail, June 3, 2023).

Bias. How accurate AI’s answers are depends heavily on the data and the people used to train it, and on how it is asked questions. The information “may be biased, generating misleading or inaccurate information that could pose risks to health, equity, and inclusiveness.” For more on bias, see our article on AI Bias.

Supreme Confidence or Lack of Questioning Its Own Answers. Chatbots have an answer for everything. However, while they “can appear authoritative and plausible to an end user”, they “may be completely incorrect or contain serious errors, especially for health-related responses.” LLMs also could be “misused to generate and disseminate highly convincing disinformation in the form of text, audio, or video content that is difficult for the public to differentiate from reliable health content.” Social media platforms make this especially challenging.

Cybersecurity Concerns. Concerns about cybersecurity and medical devices broke into the public view in 2008, when a team of researchers proved that a pacemaker was hackable. Most notably, as one report put it: “Former US Vice President Dick Cheney has for years warned that America needs to be on guard against terrorist threats. As it turns out, he took that warning to heart quite literally. In a recent interview with medical journalist Sanjay Gupta on CBS News’s 60 Minutes, Cheney and one of his doctors revealed for the first time that the Vice President’s pacemaker had its wireless feature disabled in 2007, ‘fearing a terrorist could assassinate the vice president by sending a signal to the device,’ as CBS News put it.”

Fifteen years on, the vulnerability of medical devices continues to be a real concern, with the FBI issuing a warning about outdated, legacy devices in September 2022. A noted expert in AI said at a webinar recently that between 2016 and 2020, there was a 17-fold increase in device cybersecurity vulnerabilities reported by the Cybersecurity and Infrastructure Security Agency. A vulnerability is any software flaw that might allow a hacker to access data or remotely take control of a device, potentially causing it to generate inaccurate readings, misleading data or incorrect dosages.

It’s scary that ransomware attacks can render medical devices used in health systems inaccessible. And almost 300 health systems were impacted by ransomware attacks last year.

Ready or not, health care is undergoing a massive transformation driven by artificial intelligence. But medical schools have barely started to teach about AI and machine learning — creating knowledge gaps that could compound the damage caused by flawed algorithms and biased decision support systems.

For more articles on Artificial Intelligence, go to:

- An AI (Artificial Intelligence) Primer

- AI’s Capabilities

- AI Achievements

- ChatGPT (or any AI bot) and Your Medical Office

- Teaching AI in Medical School

- Patient Trust in AI Chatbots & ChatGPT

- Competitors to ChatGPT

- AI Policies and Regulatory Challenges

- AI Bias

- AI’s Limitations, Concerns and Threats

- What AI Can’t and Shouldn’t Do

- AI and Accountability

- The Dangers of AI

- The Future of Generative AI

- AI and Medicine

2Ascribe Inc. is a medical and dental transcription services agency located in Toronto, Ontario, Canada, providing medical transcription services to physicians, specialists (including psychiatry, pain and IMEs), dentists, dental specialties, clinics and other healthcare providers across Canada. Our medical and dental transcriptionists take pride in the quality of your transcribed documents. WEBshuttle is our client interface portal for document management. 2Ascribe continues to implement and develop technology to assist and improve the transcription process for physicians, dentists and other healthcare providers, including AUTOfax. AUTOfax works within WEBshuttle to automatically send faxes to referring physicians and dentists when a document is e-signed by the healthcare professional. As a service to our clients and the healthcare industry, 2Ascribe offers articles of interest to physicians, dentists and other healthcare professionals, medical transcriptionists, dental transcriptionists and office staff, as well as of general interest. Additional articles may be found at http://www.2ascribe.com. For more information on Canadian transcription services, dental transcription, medical transcription work or dictation options, please contact us at info@2ascribe.com.